by DavidSpratt | Jul 10, 2018 | ICT

Data protection is the process of safeguarding important information from corruption, compromise or loss.

Many of us will have watched with some concern the ongoing reports of hacking, ransomware (where a hacker locks or encrypts your company data and demands a ransom before releasing it) and data theft by outside agencies.

IT Security Threats Pose New Risks for Owners and Directors

As owners and Directors of businesses in this country, we cannot ignore the real risks that theft or destruction of company data poses to our companies. Stricter laws governing Directors’ responsibilities make risk management and mitigation very personal.

Henri Elliot, Founder and CEO of Board Dynamics, commented to me recently: “It is essential Directors take a strong position on all forms of risk. Risk should be on the Board’s agenda each month and should be appropriately categorised. For example, is a staff member taking a list of clients a company policy issue? An HR issue? An IT security issue? In truth, it is all of the above, and Directors need to take a holistic approach.”

Security Risks Are Mainly Internal

The scary thing when we consider the risks around IT is that it is not the sneaky Russians or the depraved teenage geeks who represent the real threat to most businesses. In fact, it’s often quiet Jane from Finance or good old reliable Mac from Sales who represent the real and present danger.

If you think I am being a bit dramatic (and my wife would agree with you) think again. Here are a few things that should give you food for thought.

Nearly two-thirds of surveyed employees who leave an organisation, whether voluntarily or involuntarily, say they take sensitive data with them.

That is a real wake-up call when you consider that your staff will almost inevitably have access to sales and customer records, design secrets and new product plans.

Nine out of ten Information Technology (IT) staff surveyed indicated that if they lost their jobs, whether through redundancy or dismissal, they would take sensitive company data with them.

Techies are extra smart, often socially inept and prone to impulsive behaviour when stressed. Just because Jason the geek is a bit dishevelled in the morning doesn’t mean he is not capable of revenge served cold.

So how does Jane, Mac or Jason walk out the door with your most valuable secrets? In truth, they probably don’t carry anything out. Your worst enemy is email: over a quarter of data thefts have been as simple as attaching a file to an email and sending it home or to a friend.

Next on the IT security threat list for most small to medium businesses is that convenient friend, the USB stick. In many cases, these data downloads start quite innocently with your trusted person downloading files, so they can work from home. It’s only when they are preparing to leave that the true value of the customer list they downloaded becomes clear.

I could dwell on the ways you can lose company data, but in truth this would only make you overly fearful. Instead, let’s look at a couple of the signals that your data may be at risk.

Signals Your Data Might Be at Risk

Negative Work Events

Laying off or firing staff, whatever the reason, should be a signal that your data is at risk. A huge proportion of internal IT security failures stem from a desire for revenge. If you are planning to terminate a staff member, it is important that you monitor that person’s behaviour. A surge in large files being downloaded, or in emails to an unusual address, should be a huge red flag.
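To make the red-flag idea concrete, here is a minimal Python sketch. It assumes you can export outbound email logs as simple records (sender, recipient, attachment size); the record layout, the trusted-domain list and the 10 MB threshold are hypothetical values for illustration, not features of any particular mail system.

```python
from dataclasses import dataclass


# Hypothetical log record; the field names are assumptions, not a real mail system's export format.
@dataclass
class OutboundEmail:
    sender: str
    recipient: str
    attachment_bytes: int


# Assumed values for illustration only.
TRUSTED_DOMAINS = {"example.co.nz", "client-a.com"}  # your own and known partner domains
LARGE_ATTACHMENT_BYTES = 10 * 1024 * 1024            # 10 MB


def red_flags(emails):
    """Return (email, reasons) for messages sent to an unfamiliar domain or carrying a large attachment."""
    flagged = []
    for email in emails:
        domain = email.recipient.rsplit("@", 1)[-1].lower()
        reasons = []
        if domain not in TRUSTED_DOMAINS:
            reasons.append(f"unfamiliar domain {domain}")
        if email.attachment_bytes >= LARGE_ATTACHMENT_BYTES:
            reasons.append("large attachment")
        if reasons:
            flagged.append((email, reasons))
    return flagged


if __name__ == "__main__":
    log = [
        OutboundEmail("jane@example.co.nz", "accounts@client-a.com", 200_000),
        OutboundEmail("jane@example.co.nz", "jane.home@gmail.com", 25_000_000),
    ]
    for email, reasons in red_flags(log):
        print(email.sender, "->", email.recipient, ":", ", ".join(reasons))
```

In practice you would compare each person’s activity against their own normal pattern rather than a fixed threshold, since it is the surge that matters.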

Complacency

In many cases, data security failures are just a case of staff members, managers, or owners who just don’t get it. Data is valuable only if you see it that way.

The signals of complacency are often clear. You should be troubled by people violating simple IT security policies, such as keeping passwords secret. If you ignore knowingly irresponsible behaviour, it is the company that will pay and the staff who will end up with their jobs at risk.

Next month I will run through the key things you can do to reduce the risk of insider security threats without treating your much-loved people as if they are criminals.

by Jon Rabinowitz | Mar 23, 2018 | Energy, ICT

A client of ours recently installed energy sensors across two areas of their facility. One area is significantly older and uses equipment that was good practice in its day; the other is brand new and takes advantage of advances in equipment technology. Both areas are similar in size and perform the same operation, so measuring energy performance in the old and new areas will give our client real insights when making future strategic decisions.

While our client operates a large portfolio of facilities around the North Island, they are using this specific site as a sandbox environment, a testbed to trial new initiatives as they look to upgrade and replace existing equipment at their other facilities.

Utilising real-time energy data to measure performance against a range of benchmarks will allow them to verify performance gains and deliver insights into which areas should be prioritised in their long-term business plan.
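As a simple illustration of measuring the old area against the new one, here is a minimal Python sketch that normalises energy use by output (kWh per unit produced). The readings and production figures are invented placeholders, not our client’s data, and energy per unit is only one of the benchmarks a business might choose.

```python
def energy_intensity(kwh_readings, units_produced):
    """kWh consumed per unit of output over the same period."""
    if units_produced <= 0:
        raise ValueError("units_produced must be positive")
    return sum(kwh_readings) / units_produced


def saving_percent(old_intensity, new_intensity):
    """Percentage reduction in energy per unit when moving from old to new equipment."""
    return (old_intensity - new_intensity) / old_intensity * 100


if __name__ == "__main__":
    # Hypothetical half-hourly kWh readings from each area over the same period.
    old_area = [42.0, 44.5, 43.2, 41.8]
    new_area = [31.0, 30.4, 32.1, 30.5]

    old = energy_intensity(old_area, units_produced=500)
    new = energy_intensity(new_area, units_produced=500)
    print(f"old: {old:.3f} kWh/unit, new: {new:.3f} kWh/unit, saving: {saving_percent(old, new):.1f}%")
```

Normalising by output matters because the two areas will rarely produce exactly the same volume in a given period.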

Non-intrusive wireless energy sensors that can easily be moved to measure other areas, combined with powerful cloud-based reporting software, provide a cost-effective and flexible way to build business cases.

The following article, written by Jon Rabinowitz at Panoramic Power, highlights the fact that, with Internet of Things (IoT), businesses can now test ideas in a quick and cost-effective manner while collecting valuable data for future decision making.

The Internet of Things has exploded onto the scene and with it a slew of potential business applications. In navigating this terra nova, most decision makers take their cues from the competition, afraid of wading too far into the unknown. This is reasonable, of course, but it’s also a big mistake.

Smart business owners and managers should know that they don’t need to resign themselves to the role of a follower in order to hedge their bets and mitigate their exposure to risk. You can lead and be cautious at the same time!

A False Dichotomy in Applied Internet of Things Investment

Consider, for example, the business value of smart, self-reporting assets. These assets hold the promise of constantly refined operational processes, reduced maintenance costs (as issues are caught and corrected in the earliest stages before degradation occurs), extended lifecycles and the elimination of unplanned downtime.

Still, few things ever go exactly according to plan and deliver quite as advertised. So it’s understandable that prudent decision makers might set expectations below the promised value. Add to that the fact that overhauling and replacing the entirety of your asset infrastructure is incredibly expensive and a terrible disruption to operations.

It’s easy to see why some business owners and managers might prefer to sit back and let “the other guys” take the lead in implementing Internet of Things into their business operations. But easy to see and right are two very different things.

The right approach is significantly more nuanced, as the rationale presented above is built on a false dichotomy. Your choice isn’t between sitting back and doing only what the other guy already succeeded at or totally replacing all your critical assets. There’s a world of options spanning the divide between those two.

The Golden IoT Mean: New Operational Intelligence, Old Equipment

Science and technology are both predicated on the principle of testing and your business should be the same. It always makes sense to “pilot” new technologies or techniques before deploying them at large. Beyond that though, using advanced Internet of Things technologies and tools, you can infuse new operational intelligence into old equipment without replacing anything.

Until your industry has reached a “mature” state in its development and integration of IoT technologies, this is the best way to mitigate risk without forfeiting access to value while it’s still a comparative advantage.

Using smart, non-intrusive energy sensors – each about the size of a 9-volt battery – you could retrofit past-gen assets to enable next-gen operational intelligence. Simply snap a sensor onto the circuit feeding the intended asset. No need to suspend operations; no need for complicated installation.

After your sensors are in place, enter the corresponding ID numbers into the mapping console. The sensors immediately begin reporting granular energy data, which is pumped through an advanced machine-learning analytics platform and turned into new operational intelligence to be acted upon.

In this manner, facility managers can give a voice to their critical assets, allowing for advanced operational automation, predictive maintenance and generally increased production.

by Jon Rabinowitz | Feb 16, 2018 | Energy, ICT

In the past couple of years, we have written a lot of commentary about how the worlds of IT and Energy are converging. As more and more companies choose not to own and operate their IT, the role of IT departments is fast moving away from technical support towards strategic thinking informed by data analysis. The same can be said for Energy: as companies gain access to wireless energy monitoring via cloud-based analytics, and as the Internet of Things is rolled out, CIOs and IT managers have as much interest as COOs and plant managers.

Data is converging across all areas of a business, and it is increasingly used to inform both executive and operational decision making and planning. In the last 12 months, Total Utilities has installed several hundred wireless energy sensors around New Zealand. Data from these sensors is being used in numerous areas, including cost allocation, performance and product benchmarking, energy efficiency, sustainability reporting, preventative maintenance and tenant rebilling.

The following article, written by Jon Rabinowitz, underlines the need for planning and buy-in across every part of the business to ensure IoT success. Total Utilities can help your business through this planning process to ensure that key stakeholders are part of your IoT journey.

The most effective way to derive value from sensor technology and big data is to ensure you can analyze and act on the information gathered.

When sensors are deployed as data capture/communication instruments and paired with best-in-class Big Data analytics, the result is the life breath of value-driven Internet of Things technology. Unfortunately, more often than not, IoT systems are rolled out without proper planning, and simply so some manager can check a box and say that he or she undertook an IoT initiative.

When organizations are driven by hype, that value-generating substance generally falls by the wayside. Too often this is the case with IoT projects pursued for their buzz-worthiness, without due attention paid to the smart sensor and Big Data nuts and bolts of the thing.

Indeed, IoT for IoT’s sake can only get you so far. Ganesh Ramamoorthy, a principal research analyst at Gartner, told The Economic Times that “8 out of 10 IoT projects fail even before they’re launched.” If we’re to believe that figure, it raises the question: what are companies doing wrong?

Why Companies Fail In Putting Sensors and Big Data to Work

Driven by hype, organizations often attach a sense of urgency to IoT projects. According to Mark Lochmann, a senior consultant at Qittitut Consulting, that self-imposed urgency gets the better of them. In an interview with IndustryWeek, Lochmann explains that many enterprises “dive headfirst” into Internet of Things projects without really understanding how the technology affects their operations.

Too often, decision-makers get drunk on buzz and embark on technology rollouts without really understanding what needs to happen and how the technology needs to tie back into business operations in order to deliver value. They simply neglect to consider how installing hundreds, possibly thousands, of sensors across their business is supposed to produce an improved bottom line.

To avoid missteps, be sure to consider the following practical factors:

- Where the sensor data will be processed (at the point of creation, on edge devices, in the Cloud, etc.).

- Which tools analysts need to visualize and interpret data.

- How the company will validate and cleanse sensor information.

- The data governance policies which will protect data.

- The infrastructure necessary to support analysis (data warehouses, data marts, ETL (extract, transform, load) servers, etc.).
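As one example of the validation and cleansing step in the list above, here is a minimal Python sketch. The reading format, the 0 to 500 kW plausible range and the sensor IDs are assumptions for illustration; real limits depend on the circuit being measured, and a production pipeline would log rejected readings rather than silently dropping them.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Reading:
    sensor_id: str
    timestamp: int         # seconds since the epoch (assumed format)
    kw: Optional[float]    # power reading; None when the sensor missed a sample


# Assumed plausible range for the monitored circuit; real limits depend on the equipment.
MIN_KW, MAX_KW = 0.0, 500.0


def cleanse(readings):
    """Drop missing, implausible and duplicate readings, then sort by sensor and time."""
    seen = set()
    clean = []
    for r in readings:
        if r.kw is None or math.isnan(r.kw) or not MIN_KW <= r.kw <= MAX_KW:
            continue  # missing or physically implausible value
        key = (r.sensor_id, r.timestamp)
        if key in seen:
            continue  # the same sample transmitted twice; keep the first copy
        seen.add(key)
        clean.append(r)
    return sorted(clean, key=lambda r: (r.sensor_id, r.timestamp))


if __name__ == "__main__":
    raw = [
        Reading("S1", 60, 12.5),
        Reading("S1", 0, 11.9),
        Reading("S1", 60, 12.5),     # duplicate
        Reading("S1", 120, None),    # dropped sample
        Reading("S1", 180, -3.0),    # impossible negative draw
        Reading("S1", 240, 9999.0),  # spike beyond the assumed range
    ]
    for r in cleanse(raw):
        print(r)
```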

Many companies lack the wherewithal to address these basic project components. Research from PricewaterhouseCoopers and Iron Mountain reveals that only 4% of enterprises “extract full value” from the data they own. However, planning is not the only thing holding companies back. The same study found that an additional 36% of businesses were hamstrung by system and resource limitations, regardless of how thoroughly they had thought through the project.

That paints a rather bleak picture, but there’s no reason to despair. Those statistics notwithstanding, there remains plenty that conscientious managers can do to ensure the success of their integrated sensor and Big Data initiatives.

Start With Specific Use Cases, Then Dig Deeper

Many organizations don’t know how sensors and big data will impact their data centres.

Managers leading sensor and Big Data projects need to outline specific use cases before even thinking about implementation.

A smart manager will have a firm handle on how such technology is likely to impact operations on a day-to-day level – not based on intuition or imagination but on data. Better yet, that manager will set very specific expectations, delivery processes and timelines for what he or she wants to achieve on the back of sensor and big data technology.

For example, let’s say you want to install advanced wireless sensors in your facility. The person leading the project should explicate his or her intention to leverage collected data in order to:

- Diagnose problems with equipment to predict failures.

- Analyze the production efficiency of each asset.

- Determine how many tons of material you process on an hourly basis.

- Monitor worker health and locations across the facility.

Of course, not all data is equal, and much of the difference comes down to data type. Data on equipment functionality is among the most important and time-sensitive, and it should be processed immediately because it can tell the story, in real time, of costly malfunctions.
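A common way to act on equipment data in real time is to compare each new reading with a recent baseline. The Python sketch below flags a power reading that sits more than k standard deviations from the rolling mean. The window size, k and the 0.1 kW floor are assumed values, and this simple statistical rule is a stand-in for, not a description of, any vendor’s machine-learning analytics.

```python
from collections import deque
from statistics import mean, stdev


class DriftDetector:
    """Flags readings far from the rolling baseline of recent readings (a simple z-score rule)."""

    def __init__(self, window: int = 12, k: float = 3.0, min_sigma: float = 0.1):
        self.history = deque(maxlen=window)  # most recent readings, oldest dropped first
        self.k = k                            # how many standard deviations counts as abnormal
        self.min_sigma = min_sigma            # floor so a perfectly flat baseline still has a tolerance

    def check(self, kw: float) -> bool:
        """Return True if this reading looks abnormal, then add it to the baseline."""
        alert = False
        if len(self.history) >= 2:  # stdev needs at least two points
            baseline = mean(self.history)
            spread = max(stdev(self.history), self.min_sigma)
            alert = abs(kw - baseline) > self.k * spread
        self.history.append(kw)
        return alert


if __name__ == "__main__":
    detector = DriftDetector(window=5)
    for kw in [10.0, 10.2, 9.9, 10.1, 10.0, 15.0]:
        if detector.check(kw):
            print(f"check equipment: {kw} kW is well outside the recent baseline")
```

Because every reading is added to the baseline, a lasting change in consumption is flagged at first and then gradually accepted as the new normal.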

Some of your data will demand review in real time, some every half hour and some every other month; most will fall somewhere between those poles. Regardless of the data type, you’ll need to be sure that when you consult it, it conveys something meaningful and actionable. For this, you’ll need to plan out, and gain relative mastery over, your processing system.

This means carefully choosing an analytics solution or a combination of complementary solutions. It may also mean hiring an in-house data scientist or simply bringing in outside help for training purposes. In some cases, it will even mean building out an on-site information network. In every case, it will mean instilling a data- and value-driven culture, where employees not only have access to tools but know when and how to properly use them.

Remember that there’s no such thing as too much research, and that hype can be as dangerous as it is enticing. Bearing that in mind, the world of IoT is big and wide and waiting for you!

by DavidSpratt | Feb 7, 2018 | ICT

Last week the IT industry was shocked to find that the Intel chipsets that drive many of our smartphones, laptops, desktops and servers have an architectural flaw that exposes them to hacking. The other two major chipset manufacturers, AMD and ARM, appear to be much less affected.

By patching their operating systems to address this design flaw in Intel chipsets, OS providers have been forced to sacrifice the thing that affects us most: performance. The numbers aren’t out yet, but we could see performance fall by between 5% and 30%.

For the average user, even a 30% reduction in performance on their laptop or smartphone might not have any discernible impact. Most of us don’t use even a fraction of our machines’ capability, and with cloud software services such as Office 365, the compute power is in the cloud anyway. But herein lies the problem…

Cloud Service Providers (CSPs) like Microsoft, Google and Amazon are massive consumers of high-intensity computing power. Every day they wrestle with optimising the cost and performance of their infrastructure to meet their own needs and those of their customers.

CSPs have been the first to implement the security patches on their servers, as you would expect from responsible global providers. This is where a 30% performance reduction really hurts. My guess is that their systems architects have been in a right state of panic about how to avoid significant performance degradation without installing loads of new equipment in a hurry.
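A rough back-of-envelope calculation shows why. Assuming the workload stays the same and a slowdown of p applies evenly across every server (a simplification), the number of servers N a provider needs scales as:

```latex
N_{\text{after}} = \frac{N_{\text{before}}}{1 - p},
\qquad p = 0.05 \Rightarrow N_{\text{after}} \approx 1.05\,N_{\text{before}},
\qquad p = 0.30 \Rightarrow N_{\text{after}} \approx 1.43\,N_{\text{before}}
```

So at the top of the estimated range, a provider would need roughly 43% more hardware just to stand still.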

What does this mean to you, the business user of cloud services?

The future performance, and thus the relative cost, of chipsets will also likely change for the worse in the short to medium term. The security flaw I mentioned earlier arose from manufacturers’ drive to make chipsets run faster. That design is no longer acceptable from a security perspective and will have to change somehow, and it will take time for manufacturers to come up with a new solution. In the meantime, the performance/price gains we have come to expect will be very hard won indeed. Expect price increases for Intel-based computers and some smartphones.

Expect cloud service prices to rise as CSPs move to recover their increased infrastructure overheads, both now and in the future.

There is little we can do in New Zealand to force a better deal from the big multinationals. Local providers like Datacom and Revera are subject to price/performance challenges as much as the next company and are unlikely to welcome requests for better pricing.

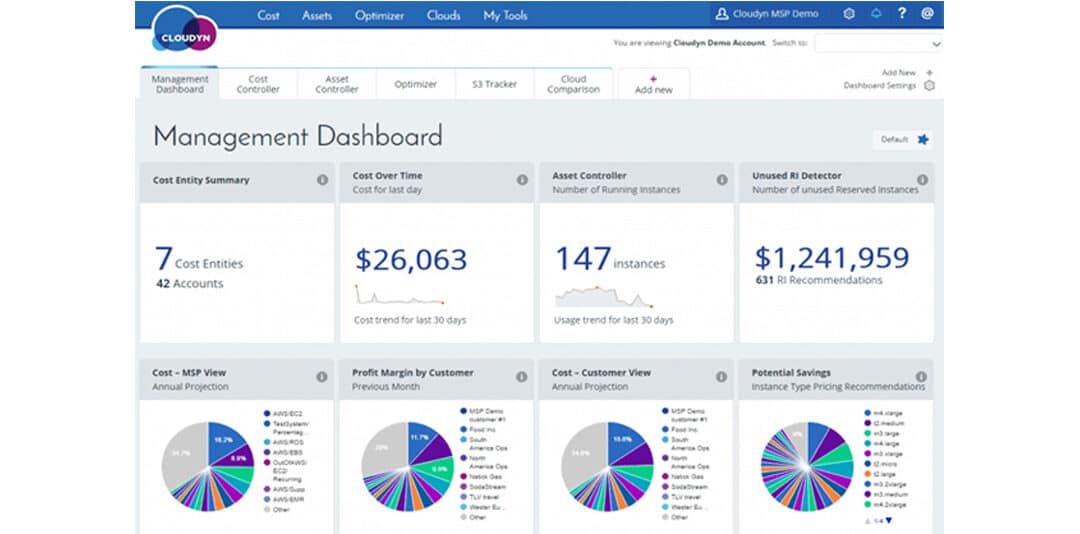

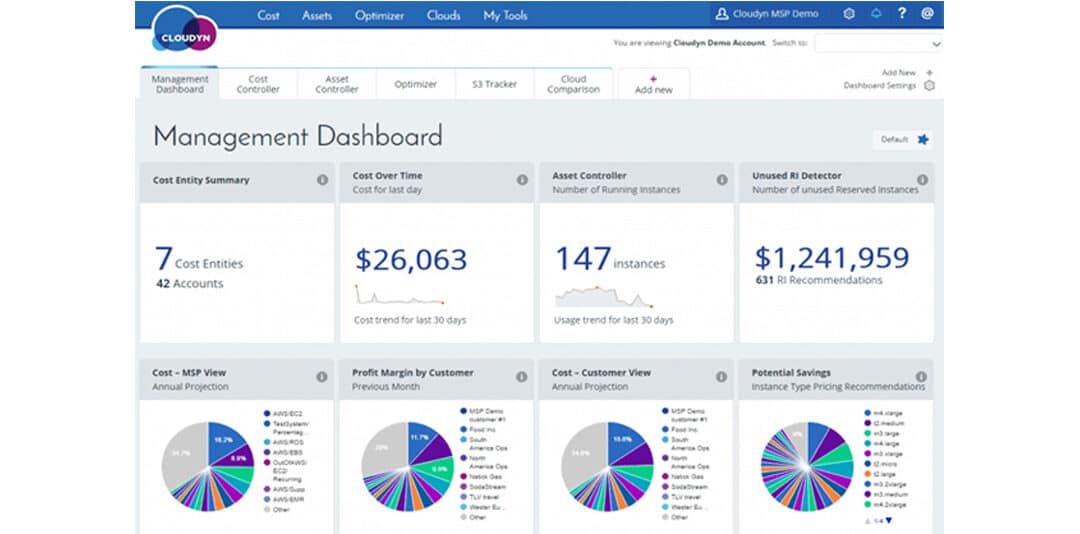

What you can do, though, is manage cloud computing costs by closely monitoring when and how you use cloud infrastructure. Microsoft and Amazon both now offer a lower price in return for committing to future consumption. You can also buy services on the spot market, where discounts can be as high as 90%, but you must know what you are doing, because spot prices go up and down with demand.
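To show how committed and spot pricing can combine, here is a hedged Python sketch. The hourly price and both discounts are made-up placeholders, not real Microsoft or Amazon rates, and splitting a workload into steady, peak and interruptible batch hours is an assumption about how a typical business might use the cloud.

```python
# All prices and discounts are hypothetical placeholders, not real Microsoft or Amazon rates.
ON_DEMAND_PER_HOUR = 1.00  # assumed pay-as-you-go price per server-hour
RESERVED_DISCOUNT = 0.40   # assumed discount for committing to future consumption
SPOT_DISCOUNT = 0.70       # assumed; real spot discounts rise and fall with demand


def blended_monthly_cost(steady_hours: float, peak_hours: float, batch_hours: float) -> float:
    """Steady load on committed pricing, peaks on demand, interruptible batch work on spot."""
    committed = steady_hours * ON_DEMAND_PER_HOUR * (1 - RESERVED_DISCOUNT)
    on_demand = peak_hours * ON_DEMAND_PER_HOUR
    spot = batch_hours * ON_DEMAND_PER_HOUR * (1 - SPOT_DISCOUNT)
    return committed + on_demand + spot


def all_on_demand_cost(steady_hours: float, peak_hours: float, batch_hours: float) -> float:
    """Baseline: every server-hour bought at the pay-as-you-go price."""
    return (steady_hours + peak_hours + batch_hours) * ON_DEMAND_PER_HOUR


if __name__ == "__main__":
    usage = {"steady_hours": 7200, "peak_hours": 800, "batch_hours": 2000}
    blended = blended_monthly_cost(**usage)
    baseline = all_on_demand_cost(**usage)
    print(f"blended: ${blended:,.2f}  all on-demand: ${baseline:,.2f}  saving: {1 - blended / baseline:.0%}")
```

Only interruptible work goes on the spot market here, because spot capacity and prices move with demand; the steady base load is where a commitment pays off.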

Monitoring and then actively managing cloud consumption requires experience and skill. Rather than trying to do it yourself, consider using the services of a utility analytics firm. This will help inform your decisions, tie cloud cost savings directly to how you use the services, and let you focus on the business at hand rather than on a rapidly changing and complex computing marketplace. Total Utilities has the tools and expertise to deliver real insights and recommendations to help optimise your cloud consumption and cost. For more information, contact us today.

by DavidSpratt | Dec 18, 2017 | ICT

Last year I compiled a satirical list of the ICT companies that won or lost the battle for the affections of us ICT brokers who buy on behalf of businesses. The response was a mixture of simpering sweetness and hate mail (you know who you are).

Nevertheless, I’m doing it again this year, in this article.

To the colleagues whom I offend in the next few hundred words, I apologise for my ill humour, even if you have been the authors of your own destruction.

ICT Winners and Losers (a satire)

The Coolest Kids in Town Award: Kordia

Who would have thought that Kordia (formerly the government-owned BCL, with its unwashed horde of nerds in walk shorts, sandals, long socks and straggly beards) would have transformed itself over a single decade into the ultra-cool company it is now?

Delivering superb Ultra-Fast Broadband innovation at impressive price/performance levels, and showing market leadership in emerging technologies such as the Internet of Things enabler Sigfox, it seems maybe geeks can fly after all.

The How Many Fingers am I Holding Up? Award: 2degrees

It seems only a year or two ago that 2degrees offered great coverage to the major cities and a big finger to the provinces.

Millions of dollars and a heck of a lot of hard work later, they now offer something resembling decent coverage at a very competitive price. A player to watch in 2018.

The Spurned Lover Award: Spark Marketing

Please, please, please Spark will you stop telling us how crap you were and how things will get better now that you have sacked half your staff. When all you have left are excuses for your bad behaviour and pleas for forgiveness, maybe it’s time to make a break with the past and move on.

In truth, you are damn good at the things that matter, and most of us love buying off Kiwi companies (see Kordia and Datacom).

The People’s Choice Award: Datacom

For those of you who have nothing but bad things to say about these guys, I have two questions: “Where do the vast majority of the most respected and admired ex-Gen-i and Computerland people work now?” and “Which other Kiwi success story has grown at over 11 per cent year-on-year for over a decade, while competing head to head against some of the world’s smartest and most successful multinationals?”

Datacom has proved, to the tune of $1.2 billion a year in revenues, that people buy off people, even if most of them have grey hair poking out their noses and ears.

The Comeback Kid Award: Samsung Galaxy S8

The best thing since the Nokia flip phone. Great camera, features and battery life. Pity people still think twice before putting it in their pocket.

The Lone Ranger Award: People still investing in DIY IT infrastructure

The No 8 wire mentality that you were so proud of in the 90s is now just a sign of your desire to control everything at the expense of business flexibility.

We know you want to prove that you are more intelligent than everyone else, but have you noticed that the guy who pays your wages and conducts your annual salary review has stopped putting you in front of the business and has informally renamed your section “The Department of No”?

That roaring sound is the jet engine that is public cloud departing with your career prospects.

The Hottie of the Year Award: Samsung Note 7

I was so proud when I took you out of the box and stroked your luscious lines. The screen definition, the camera, the selection of cool software: you made me truly happy for a while.

Then there was the smoke, the heat, the burning sensation in my pocket…has anyone seen the fire extinguisher? Oh, the humanity!

Merry Christmas readers. Talk in the New Year.

David Spratt is a director of Total Utilities.

by Mike | Nov 14, 2017 | ICT

Telecommunications services are a commodity and this has driven the price of services, especially consumer mobile and fixed broadband, to levels that were only a dream a few years ago.

Here is some context. My 13-year-old son gets more from his $10-per-month prepay mobile plan today than I got from my $35-a-month post-paid plan (plus handset) in the late 1990s. At that time, text messages cost 20c each and were only available from Vodafone.

My home fibre broadband connection, without a phone line attached, costs $95 per month and gives me 103 Mbps throughput at quiet times; even at the worst times I still see 70 Mbps. When I moved into my current house in late 2001, 56 kbps dial-up was all we had.

All this transformation has been very good for consumers and has driven a massive change in the way we consume telco services. Our household has ditched the fixed line because only telemarketers and political researchers were calling on it. Instead, we use mobiles, Skype, Facebook and WhatsApp to communicate locally and internationally. We disconnected traditional television a year or so ago because of the ads, and our entertainment is now delivered via the internet: Netflix, YouTube and online games on a PS4.

Commoditisation enables competition

The ever-increasing commoditisation of services, along with the entry into the market of a large number of new fibre suppliers and telecommunications retailers, has created a highly competitive marketplace. There are now around 80 companies fighting to provide broadband services to an increasingly demanding client base.

This phenomenon is not unique to telecommunications. In New Zealand’s open market it is typical to find multiple players fighting for share. In another example, the retail electricity market here has 32 actively trading retailers, compared with 38 retailers in Texas, which has 28 million people (New Zealand has fewer than five million).

Fierce competition has meant a constant driving down of retail pricing. Take, for example, Skinny’s unlimited fixed broadband plan at $68 a month, delivered on 100 Mbps fibre. After paying Chorus’ wholesale charges, that price leaves Spark (which owns Skinny) with only $16 of the $68.

In response, Spark is refocusing on wireless broadband rather than continuing to fight a “race to zero” price war over fibre-based services. Spark is now pushing very hard the idea that connectivity should be wireless, and is offering home broadband on its 4G mobile network at the same rates as its ADSL products.

Spark boss Simon Moutter commented at the company’s annual meeting:

“Spark has reoriented its business towards a wireless future and making a strategic shift from a traditional telco with international interests to being a New Zealand-focused digital services company.”

It now looks like the relationship between Spark and Chorus, which was never an easy relationship, has moved to an all-out war.

Chorus has responded by saying it will become an active wholesaler, pushing its products in the market, which is a big change from being a passive wholesaler. For customers on ADSL, 4G fixed cellular broadband presents a real alternative. This type of service has been very effective for rural customers who had slow or non-existent broadband.

What does this mean for telecommunications customers?

I don’t see any downside. If you have fibre in your street, choose a provider and enjoy. If you currently have a VDSL connection available, stay on it; the service is high speed and low latency.

For those unlucky enough to have only ADSL, there are two camps: some will get good speeds, and others will not be so fortunate. If your ADSL is not up to scratch, I believe the Spark 4G service is an excellent option. With 5G just around the corner, even more options will be available in the near future.

Once again, competition has brought better services at lower prices to consumers. It’s the lumbering old telcos that are paying the price.